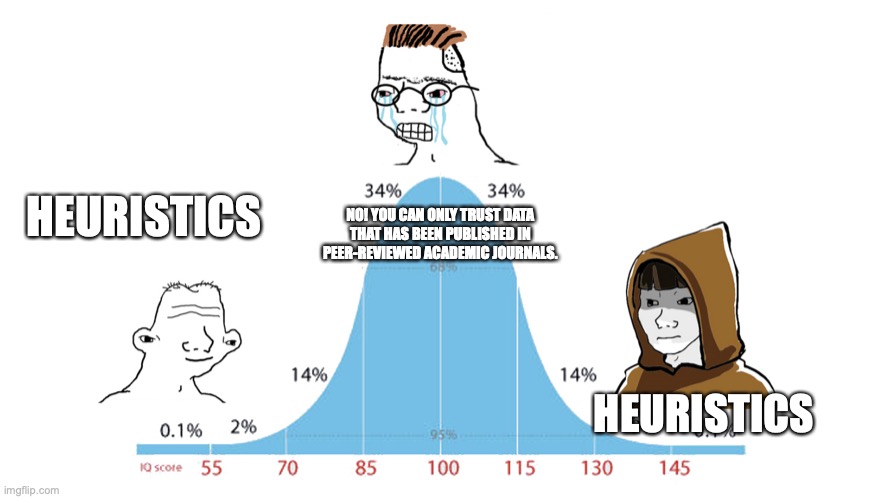

Eventually you come back to heuristics, only better ones.

I used to think that Christian ethics, for example, was dumb, or that the people who followed it were dumb. Instead of trying to decide for themselves what was right or wrong (is abortion okay?) or true or false (is man-made activity causing the climate to change?), they just did whatever some old book told them, or whatever some credentialed expert in the old book told them it said on the topic.

Stupid.

Instead, people should just be open to all ideas and judge situations on a case by case basis.

Also stupid. Worse, it's incredibly time-consuming, to the point of being debilitating. So heuristics it is.

Good rules and bad rules

The following tweet illustrates two popular heuristics I see otherwise smart people use a lot: cui bono and the black box fallacy.

Yglesias has strong opinions and rising prestige, as evidenced by his popular Substack. So what does the gossip trap phenomenon tell us? People will respond by trying to pull him back in the bucket with the rest of us.

The explainer's dilettantish features are nicely surmised, but it's also worth adding that Yglesias is deeply incurious and a bad reporter, which is why he constantly gets stuff wrong (basis points, SBF, Santos, Andrew Yang as NYC mayor, etc).

— Alex Sammon (@alex_sammon) January 11, 2023

I have to assume the “carry water for the rich” line is a reference to Yglesias’ YIMBYism. There are countless examples of how policies that affect homebuilding affect homelessness and the cost of housing, so I have to assume people against YIMBYism have low levels of information. So Sammon relies on the cui bono heuristic: if bad/evil people (i.e., rich developers) benefit, then it must be bad. Which, it should go without saying, is a dumb heuristic.

(After finding a way to circumvent the WaPo paywall and reading the story, I think it's more likely that the line refers to the story's insinuation that Yglesias has an inside track to influential politicians and writes the blog posts they want him to write, an unproven assertion that seems, I dunno, deeply incurious. But I have read others who argue that YIMBYism is bad simply because developers benefit. So the critique still stands; it just applies to people not mentioned here.)

Black Box

The other bad heuristic is what I’m calling the black box fallacy, a reference to an SSC post. Basically, there are countless geniuses who had really dumb ideas. So dismissing Newtonian physics just because Sir Isaac thought there were hidden codes in the Bible would be a bad idea.

I highly doubt Mr. Sammon has a statistical analysis of journalists' errors showing that Yglesias is among the worst. So he has low information here. Instead, Sammon is probably highly sensitive to the topics in which he’s discovered an Yglesias error, which is really just the availability heuristic at work (i.e., his opinion of Yglesias is informed by the blog posts most readily available in his mind, the ones where he has discovered an error).

But what Sammon is really getting at here is an attack on Yglesias’ growing prestige, since Yglesias seems highly influential in areas that are, I imagine, at odds with Sammon’s beliefs. Sammon can’t possibly know that Yglesias is wrong in every subject he writes about, so Sammon relies on the black box fallacy: if he was really wrong in these instances, then he must be wrong everywhere and can be dismissed without further inquiry.

But more importantly, and I'm definitely impugning motives here, I get the sense that Sammon wants you to think that, because Yglesias was wrong in these instances, he must be wrong everywhere and can be dismissed without further inquiry. He wants to yank down Matt's prestige and influence.

Think Less

I write about situations I find interesting and I find them interesting because I’m not sure what the answer is. But I want to know what the answer is because I want to live my life. So I run through all these scenarios, not because I think judging everything with an open mind, on a case by case basis, is the right approach. I’m doing it to build out a moral framework so, like the Christians and their old book, I can consult my old framework for my response and not have to think.

Going back to the meme I posted at the top, I’m trying to develop heuristics that are better than the Kahneman default heuristics.

Here is one that I like: I call it the boring heuristic. If something seems off, and I have low information, instead of attributing it to something like malice, conflict, corruption, or stupidity, I try to come up with the most boring explanation possible and stick with that.

People in my town are mad that the town is slow to respond to requests to remove public trees. We don't have a lot of information on the workings of our local government, so we rely on heuristics for an explanation. Most people default to the evil/stupid fallacy: either we have incompetent, lazy people who don't respond to requests, or we have corrupt officials who are laundering tax dollars into their own pockets and claiming that there isn't enough money.

But a more boring explanation, like "There just isn't a lot of money in local government budgets and the town can only remove so many trees in a fiscal year," seems more plausible to me.

He was right even when he was wrong

I also have a "people with reputations and money on the line are usually right" heuristic. The Matt Yglesias piece in question is paywalled but my super-sleuthing revealed this line from the story:

"At age 21, Yglesias was laying out the logician’s case for the invasion of Iraq, because how could the most powerful, informed men on Earth be so stupid? In May of this year, Yglesias declared that Bankman-Fried “is for real,” because why else would wealthy people risk their money?"

With hindsight, we can see he was wrong in both instances. But if your heuristic for distrusting the Iraq invasion is "never listen to the government," then you're probably going to be wrong a lot and will probably die from a preventable disease for which we have readily available vaccines.

Elsewhere in the article, the journalist praises Revolving Door Project, "which saw right through Sam Bankman-Fried." Good for you, Revolving Door Project. My question is: did you have information no one else did (highly unlikely) or do you have an "all tech people are committing fraud" heuristic (probably)? If the latter, I think you're going to be wrong more often than Yglesias. You just happened to be right w/r/t SBF. I'd be impressed if Revolving Door Project also predicted the Black Lives Matter fraud, but my guess is they were too busy targeting tech billionaires to notice.

I was too worried about finding a framework that would never lead me astray. But that's silly. It's better to settle on heuristics that will be right most frequently over the long haul.

The only exception to these heuristics, other than having enough information to feel comfortable ignoring them, is the "What if I'm wrong?" question. Like, if following a heuristic leads me to believe Theory A rather than Theory B, I have to ask whether choosing Theory A could have devastating consequences if I'm wrong.

Otherwise, I stick with my boring heuristics.
